The hottest new programming language is English

September 02, 2024

The perhaps lost art of programming

"Sparse is better than dense." -- Tim Peters

I'm a programmer at heart. I care about the craft, a lot. At the age of 43, I still get myself into lengthy discussions with juniors about why this particular line of code is too long, or why our linter's default settings are perhaps not as good as their own intuition.

Other programmers are perhaps less engaged with style, though hopefully they still appreciate that readability counts, a lot. These programmers will sometimes abstract away the details of coding style by using linters that apply changes to the code automatically. This relieves them of having to care about whether to put spaces around an assignment operator or not.

I grew up as a programmer without a linter, and honestly, being thoughtful about where to apply whitespace and where not is ingrained in my muscle memory to such an extent that I can't comprehend how the brains of people who write code for a living, but don't really care what it looks like, function. So perhaps my point, with a wink, is after all: maybe these folks are still on their journey to appreciating that readability counts. (And do also consider that you read your own code many times while you write it, before any linter gets to it.)

At this point you're thinking: what is this dude on about? Should this really be a blog post about my bitterness around whitespace? It's not.

It's about putting two spaces after a period!

Okay, okay, that was also kind of a joke, though very much related to what I just said.

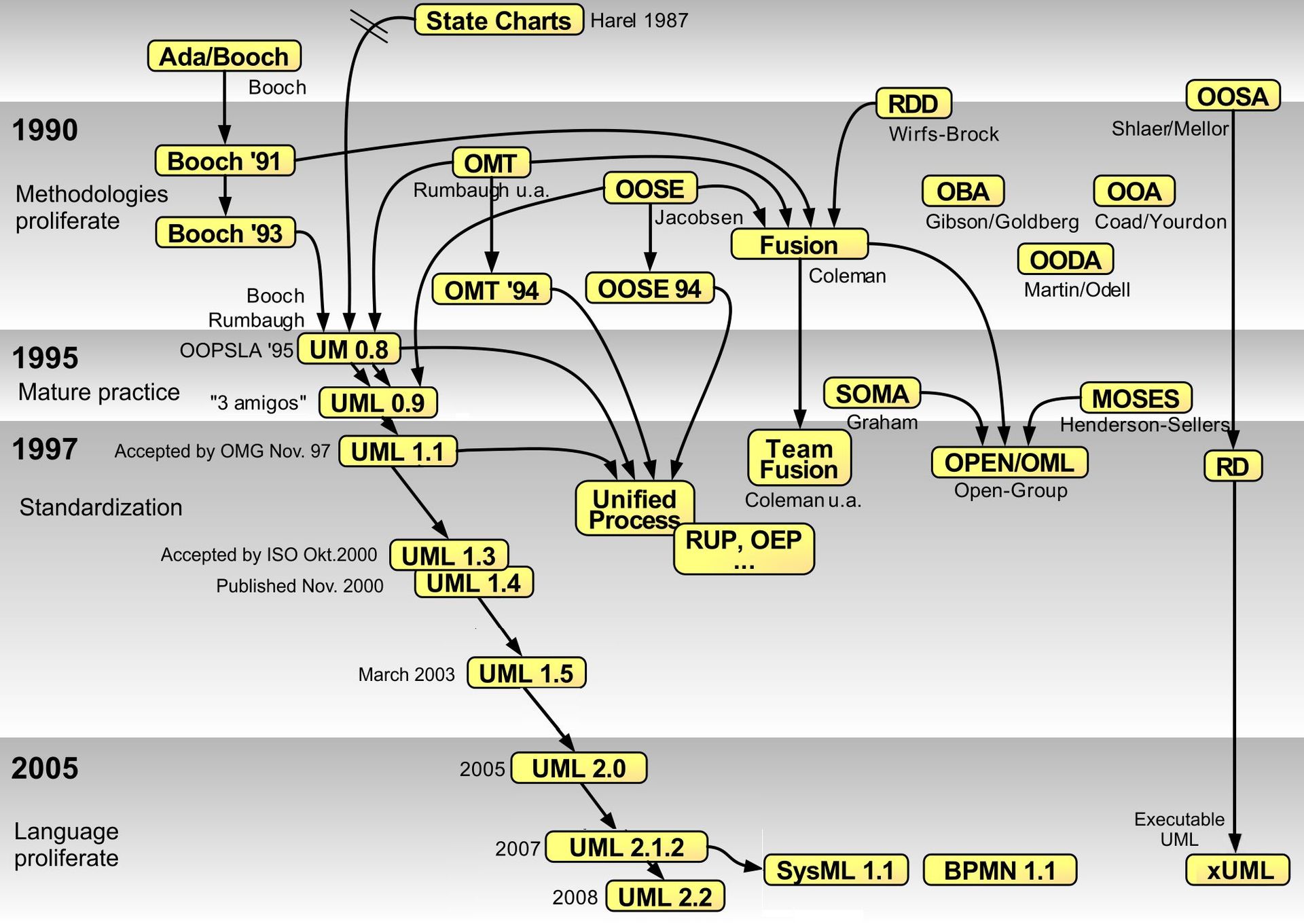

One thing that was pretty hot about two and a half decades ago, when I started doing software development, was the Unified Modeling Language (UML), along with tooling such as Rational Rose that would take UML diagrams and generate boilerplate code from them, and vice versa. I never liked this idea either. It went against my desire to craft code carefully, and against my aversion to fancy *klickibunti* (German slang for flashy, point-and-click) tools for writing software, such as integrated development environments (IDEs).

As an aside, luckily I wasn't alone in this aversion. The idea that we shouldn't need fancy tools to write code seemed to be at the core of the Python programming language's philosophy at the time, namely that code should be concise and approachable to the point where one only needs a simple text editor with a search function, and perhaps grep, to be as effective as the next person. I feel that today this idea is sadly lost amid the noise and the affinity for tooling such as linters and heavy IDEs, which have become the norm for beginning Python programmers. (Distracting autocomplete and popups are another favourite topic of mine!)

Prompt engineering reduces your problem to the skill of good writing

"The hottest new programming language is English" -- Andrej Karpathy

I recently blogged about an unfinished experiment in which I generated (untested) code for parts of a game using generative pre-trained transformers (or LLMs). At work, we continue to use machine learning and LLMs in many different applications, so it feels like a good idea to eat our own dog food outside of work, too. In other words, using LLMs to generate code for work or hobby projects, or even privately to answer one of my kids' great questions about life (and letting them do it themselves in a safe way), is a great way to learn the art of what's called prompt engineering by doing.

I don't think many users of LLMs (or small language models, SLMs) appreciate yet that, while prompt engineering is perhaps not a very technical skill, it's still very much a learned skill. It can't be replaced by copying and pasting snippets, and it's best learned by doing, making mistakes, and trying again. The skill of writing prompts is naturally closely related to the skill of expressing ourselves in language in general, including at work. (A colleague gave me the idea of saying "please" to an LLM, so that the chat conversation would be attuned to a friendly tone. The same colleague was also implying that I should say "please" more often myself. :-)

By the way, Nicholas Carlini has a really nice, though lengthy, article on how he uses AI, which I've recommended a few times already.

Evidently, prompt engineering is a very hot topic, and techniques and tricks have been discussed online for perhaps a year now. Given the hype, not all of those articles are of high quality. One thing I can probably add to this breadth of information is the following advice for learning prompt engineering:

Don't look too hard for example prompts to copy and paste literally. Take your time to develop the skill of expressing yourself clearly, to machines and to humans. Perhaps start writing a blog, too. Perhaps also learn to type faster, so you're less tempted to copy and paste. These are all learned skills! And if you manage to dedicate some time to them, you might even be rewarded by becoming a better human!

Collaborative prompt engineering

"Readability counts." -- Tim Peters

Now, one idea for learning prompt engineering together with your colleagues at work is this: create a #prompt-engineering channel in your chat server (perhaps you're using Slack). Then, whenever you or one of your colleagues comes up with a new prompt idea and tries it, and probably learns a lesson or two, they write the prompt and its results down in that channel, and this way share what they learned with everyone. It's like sharing a nice essay, and your colleagues can also learn from your own trial and error, since what you're sharing is, at a minimum: this is what worked for me.

Another piece of advice for collaboration is this: try not to bottleneck the work of prompt engineering, and of evaluating those prompts, on programmers or AI specialists. Instead, make your prompts accessible to everyone on your team who is curious, perhaps those close to the business problems you're trying to solve. You'll then also find yourself participating in the (generally important) activity of demystifying AI for your colleagues. They will probably appreciate it. They might come to see LLMs as a more effective partner in their lives. And they may find themselves with more nuance and background when the topic of AI comes up in public.

How I wrote my first non-trivial Python script of 105 lines with an LLM

"Attention Is All You Need." -- Team Transformer

Yesterday I decided to do an experiment. I had a problem at work that I wanted to solve: a typical programming problem that involves monitoring the resource usage of processes on a computer. For those curious, there's some documentation here on what exactly the program we wrote yesterday does.

So the experiment wasn't writing the program; it was generating a non-trivial Python script entirely with an LLM, which happened to be Claude 3.5 Sonnet. The rules: I wouldn't be allowed to make any changes to the code after the fact. The code generated by the LLM would have to be functionally correct and entirely bug-free! And: I would use only one prompt, with no chatting after the initial prompt, to keep things reproducible. Doing that took much of my afternoon, but boy, was it worth it! I found myself iterating many times, and this was the process:

Iterations of prompt engineering for code generation

"Test-Driven Development makes the hard part easy, and the easy part hard." -- Kent Beck

After writing a somewhat sloppy, too dense, and in hindsight very ambiguous initial prompt, I looked less at the produced code and instead tried out the program itself, blindly, as if I was a user and not a programmer. This helped me understand where my request to the LLM was too ambiguous. It also helped me understand the kinds of bugs the generated code had. The point is: I refused to give up and write the program myself, or to modify the generated code, although that was tempting; I was determined to keep refining the prompt instead. I just needed to understand what was wrong with my prompt: a lack of detail here, an ambiguous phrase there, etc.

Another trick I remembered is to ask the LLM to think through the problem first, as in a prompting technique called "chain-of-thought." From an article on Hugging Face:

Chain-of-thought (CoT) prompting is a technique that nudges a model to produce intermediate reasoning steps thus improving the results on complex reasoning tasks.

So how would we apply this idea to our problem of generating code? Code review! As a fun aside, I asked the LLM to first generate a version of the code as written by a (perhaps) junior programmer. Then I had the LLM imagine a code review in the form of a dialogue between the author of that code and another programmer, this time Guido van Rossum. :-)

The results were pretty good. However, asking for a virtual code review was clearly not enough to solve every problem the code still had at that point. Remember that Claude couldn't run the program; it didn't see the output, including the errors the program produced. That was still my job: I would paste the final generated code, after the virtual code review, into my editor and run it. I saw that some features were missing, which I may actually have requested, but perhaps not explicitly enough. And there were bugs that I could hint at in the next version of the prompt.

For the curious: for quite a while, Claude didn't get that I wanted to measure the resource consumption not only of the process launched by the script, but also of all subprocesses of that process. I had to be very explicit about that one, but eventually got a satisfying solution, one that at that point would likely have taken me longer to produce by reading the psutil docs and correcting the code by hand.
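For readers who want to see what "all subprocesses" means in code, here is a minimal sketch of sampling a process tree with psutil. It is not the script Claude generated: `Sample`, `aggregate`, and `sample_tree` are hypothetical names, psutil is assumed to be installed, and only resident memory (RSS) and CPU are covered, leaving out I/O because psutil's `io_counters()` is not available on every platform.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """Aggregated measurement of a whole process tree at one point in time."""
    rss_bytes: int
    cpu_percent: float
    num_procs: int


def aggregate(readings: list[tuple[int, float]]) -> Sample:
    """Combine per-process (rss_bytes, cpu_percent) readings into one Sample.

    Both values are summed: RSS adds up across processes (over-counting any
    shared pages), and CPU percentages add up to the tree's total, which can
    exceed 100% on a multi-core machine.
    """
    return Sample(
        rss_bytes=sum(rss for rss, _ in readings),
        cpu_percent=sum(cpu for _, cpu in readings),
        num_procs=len(readings),
    )


def sample_tree(root_pid: int, cache: dict) -> Sample:
    """Measure root_pid plus all of its descendants.

    `cache` maps pid -> psutil.Process and must be kept between calls:
    cpu_percent(interval=None) measures against the previous call on the
    *same* Process object and returns 0.0 the first time, so recreating
    the objects on every tick would report 0% CPU forever.
    Raises psutil.NoSuchProcess if root_pid itself has exited.
    """
    import psutil  # third-party; imported here so aggregate() works without it

    if root_pid not in cache:
        cache[root_pid] = psutil.Process(root_pid)
    root = cache[root_pid]
    readings = []
    for child in [root, *root.children(recursive=True)]:
        proc = cache.setdefault(child.pid, child)
        try:
            readings.append((proc.memory_info().rss, proc.cpu_percent(interval=None)))
        except (psutil.NoSuchProcess, psutil.AccessDenied):
            # The process exited (or is off limits) between listing and reading.
            continue
    return aggregate(readings)
```

A caller would create `cache = {}` once and then call `sample_tree(pid, cache)` on every tick while the monitored command runs. The cached `Process` objects are plausibly the trap behind the prompt's warning that otherwise "we'll get 0 in the outputs".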

One issue that now turned up was that the output often exceeded the output size limit of the Claude service. So I toned the review scenario down a bit by dropping the request for a first version and reducing that part of the prompt to:

I want you to reason about the code first and write an internal dialogue with yourself and Guido van Rossum. Think about possible bugs. Avoid overengineering.
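Because the rules allowed only a single prompt, the prompt itself becomes the thing you iterate on. One way to keep its parts, including the reduced review instruction above, easy to tweak between runs is a tiny helper like the following. This is a hypothetical sketch, not the workflow used for the experiment: `build_prompt` and `REVIEW_INSTRUCTION` are illustrative names, and the layout simply mirrors the structure of the final prompt in this post.

```python
# The chain-of-thought / virtual code review instruction, kept as one piece.
REVIEW_INSTRUCTION = (
    "I want you to reason about the code first and write an internal dialogue "
    "with yourself and Guido van Rossum. Think about possible bugs. "
    "Avoid overengineering."
)


def build_prompt(task: str, features: list[str], run_example: str,
                 review: str = REVIEW_INSTRUCTION) -> str:
    """Assemble one self-contained prompt (no follow-up chat needed).

    Order: task description, feature bullets, review instruction, run example.
    """
    bullets = "\n\n".join(f"- {feature}" for feature in features)
    return "\n\n".join([
        task,
        "We want these features:",
        bullets,
        review,
        f"Run example:\n\n{run_example}",
    ])
```

Editing one feature bullet and regenerating then produces a complete, reproducible prompt every time, which fits the one-prompt, no-chat rule.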

Finally, here's the entire prompt that yielded my final script, bar some whitespace changes and a reordering of imports:

We want to write a command line tool and Python package that allows us to do the following:

Monitor a command that is passed as part of the arguments and collect the most important process statistics over time, e.g. every 0.01 seconds.

Be excellent in understanding these requirements. Be great in writing the implementation!

We want these features:

- The command may spawn subprocesses. Make sure to use the most reliable way to collect stats for the subprocess and all its subprocesses.

- Collect stats such as memory usage, CPU usage, I/O. Make sure that you present these values in a human readable way eventually.

- You must aggregate stats from the process and all its subprocesses, including aggregating CPU stats and others in a meaningful way! Otherwise we'll get 0 in the outputs and be inaccurate!

- Do regular output to allow the user to see the current values of these stats while the programming is running.

- Allow the user to interrupt collection via KeyboardInterrupt, and finish. But mostly rely on a clever way of detecting whether the process to measure is finished, and automatically continue the program as if interrupted by the user.

- The final processing includes creating plots using matplotlib that show the measurements over time, with time on the x axis and the values, such as memory usage in MB, on the y axis. Make the plots beautiful and elegant.

- Create a single script, that is simple and scrappy, but absolutely bug-free with regard to aggregation of values into meaningful values for display in the console using dynamic updates using carriage returns.

- Also print summary statistics at the end of the program to the standard output using tabulate. Include max, and mean for all stats.

I want you to reason about the code first and write an internal dialogue with yourself and Guido van Rossum. Think about possible bugs. Avoid overengineering.

Run example:

python3 monitor.py python3 hacks/bartowski.py Phi-3.5-mini-instruct-Q4_K_M.gguf
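Several requirements in the prompt, namely the carriage-return status line, detecting that the command has finished, handling KeyboardInterrupt, and printing max and mean at the end, are easy to picture in stdlib-only Python. The sketch below is not the generated script: `run_and_watch` and `summarize` are hypothetical names, it records only elapsed time as a stand-in for real resource stats, and it returns plain rows that could be handed to tabulate (for example `tabulate(rows, headers=["stat", "max", "mean"])`).

```python
import statistics
import subprocess
import time


def run_and_watch(cmd: list[str], interval: float = 0.01) -> dict[str, list[float]]:
    """Run cmd, sampling until it exits or the user presses Ctrl+C.

    Only elapsed time is recorded here, as a stand-in for the memory and
    CPU readings a real monitor would take at every tick.
    """
    samples: dict[str, list[float]] = {"elapsed_s": []}
    start = time.monotonic()
    proc = subprocess.Popen(cmd)
    try:
        # poll() returns None while the child is still running, so the loop
        # ends on its own once the monitored command finishes.
        while proc.poll() is None:
            elapsed = time.monotonic() - start
            samples["elapsed_s"].append(elapsed)
            # "\r" moves the cursor to the line start, so the status updates in place.
            print(f"\relapsed: {elapsed:7.2f} s", end="", flush=True)
            time.sleep(interval)
    except KeyboardInterrupt:
        # Stop collecting and fall through to the summary, exactly as if
        # the command had exited on its own.
        pass
    print()  # move past the carriage-return status line
    return samples


def summarize(samples: dict[str, list[float]]) -> list[list]:
    """One [name, max, mean] row per statistic, ready for tabulate()."""
    return [
        [name, max(values), statistics.mean(values)]
        for name, values in samples.items()
        if values  # max() and mean() both raise on an empty series
    ]
```

On a Unix-like system, `summarize(run_and_watch(["sleep", "1"]))` would show a ticking status line for about a second and then return a single `elapsed_s` row.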

The beauty of this prompt strikes me as similar to the beauty of test-driven development (TDD). The idea in TDD is that thinking about the problem first (sound familiar?), or more concretely, thinking about the function or module you want to write, helps you refine your understanding of the problem itself. It's like putting yourself in the shoes of the user of a function before you get lost in the details of the implementation.
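To make the TDD comparison concrete: the prompt asks for values "in a human readable way", and in TDD you would pin that phrase down as tests before writing any implementation. Here is a minimal sketch with a hypothetical `human_bytes` helper; the name, the binary (1024-based) units, and the one-decimal format are all assumptions chosen for illustration.

```python
# Step 1: write the test first. Calling it fails (NameError) until step 2
# exists, and it documents what "human readable" is supposed to mean.
def test_human_bytes():
    assert human_bytes(0) == "0.0 B"
    assert human_bytes(1023) == "1023.0 B"
    assert human_bytes(1024) == "1.0 KiB"
    assert human_bytes(1536) == "1.5 KiB"
    assert human_bytes(5 * 1024**3) == "5.0 GiB"


# Step 2: the simplest implementation that makes the test pass.
def human_bytes(n: float) -> str:
    """Format a byte count using binary (1024-based) units."""
    for unit in ("B", "KiB", "MiB", "GiB"):
        if abs(n) < 1024:
            return f"{n:.1f} {unit}"
        n /= 1024
    return f"{n:.1f} TiB"
```

With pytest, a file named e.g. `test_format.py` containing this sketch would pick up `test_human_bytes` automatically. The specification exists before the code, much like the final prompt did.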

Here, the analogy is that we think about the specification of our program first, the problem we're trying to solve. Only then do we try to solve it. Repeat that a few times. By the way, I also love how the prompt is now a pretty accurate description of the program's requirements, and can thus be used to communicate them.

Lastly, I ran this (handcrafted, no less) article through Claude and asked it for a review. It turns out that Claude didn't have many recommendations apart from explaining what a linter is and adding a final section with conclusions, which I didn't do. I guess I was mostly looking for praise when I wrote the following prompt for a review. :-)

Here's a blog post that I wrote about my recent experience with you, Claude. It's written in reStructuredText. Can you please take a close look at the article? Tell me maybe paragraph by paragraph what you liked, but also give me feedback on what things I could improve. If you find any syntax problems, let me know as well. Consider that the audience includes non-technical folks that use LLMs perhaps in a non-optimal way today, and that same folks would like to understand more. Do not try to dumb the article down, however. Keep the paragraphs as is, and recommend changes only sparingly, where they are important:

<PASTED THE ARTICLE HERE>